In this story

Homework questions • Ethics • Educate yourself • Discover together • School rules? • Don't cheat • Learn prompting • Be polite • Learn job skills

A year or so ago, my teenage son, Samuel, asked if allowing ChatGPT to edit his high school essay would be appropriate.

Sam quickly pointed out that he didn’t want the artificial intelligence (AI) chatbot to write the paper. He wanted the bot to improve what he had written.

I was inclined to say no, but the question gave me pause: Was his request much different from asking me to look over an assignment and offer a few pointers, as many parents do? Was it different from running a spellchecker or grammar-checking software?

These questions recall debates from decades ago about using calculators for math homework. Nowadays, calculators and even spreadsheets are widely deployed in classrooms.

Similar questions apply to the generative AI chatbots that have arrived at breakneck speed over the past year and a half, notably OpenAI’s ChatGPT, Anthropic’s Claude, Google’s Gemini (formerly Bard) and the refreshed Microsoft Bing with Copilot. These uber-powerful tools can generate text, and sometimes images, from scratch based on the prompts fed to them. That includes school essays that are generally satisfactory.

AI technology introduces new ethical dilemmas

When does allowing a kid to lean too heavily on cutting-edge tech become cheating? What should parents and grandparents tell youngsters about the acceptable use of AI?

Artificial intelligence is definitely a topic for conversation, but definitive answers are hard to come by. Grownups are still trying to grasp the effect generative AI bots will have on society, along with the ethical and legal challenges that accompany them.

Teens are torn as well.

About a fifth of U.S. teenagers who are aware of ChatGPT, and nearly a quarter of 11th and 12th graders, say they’ve used the AI to help with their schoolwork, according to a fall 2023 survey from the Washington-based nonpartisan Pew Research Center.

About 7 in 10 of those teens say using ChatGPT to research something new is acceptable, compared with 13 percent who reject that idea and 18 percent who are unsure.

Students and older generations confront the same issues:

- Are AIs producing factual and up-to-date information?

- Are the sources behind the information, assuming you can identify them, biased?

- Do you have the right to use what an AI spits out in your school or professional work?

- Are proper citations or credit included?

Google’s Jack Krawczyk, who oversees what is now Gemini, stresses that AI prompts differ from search queries. In layman’s terms, generative AIs run on large language models, or LLMs, which are trained on vast reservoirs of text and look for common language patterns, not facts.

“What this technology does that is so fundamentally different from everything else, and why I think we’re struggling, is we’re so used to computers giving us answers,” Krawczyk says. “Tools like Bard [Gemini] give us possibilities.”

Educators offer some guidance on exploring AI with your kids:

1. Educate yourself first

Before you can navigate AI with your child, make sure that you, as a parent or grandparent, are up to speed.

More From AARP

Can Artificial Intelligence Solve Caregiving’s Crisis?

AI may detect changes before a problem can get worseQuiz: Can You Tell What’s Real and What’s AI?

Artificial intelligence is still mystifying despite all the attention it gets

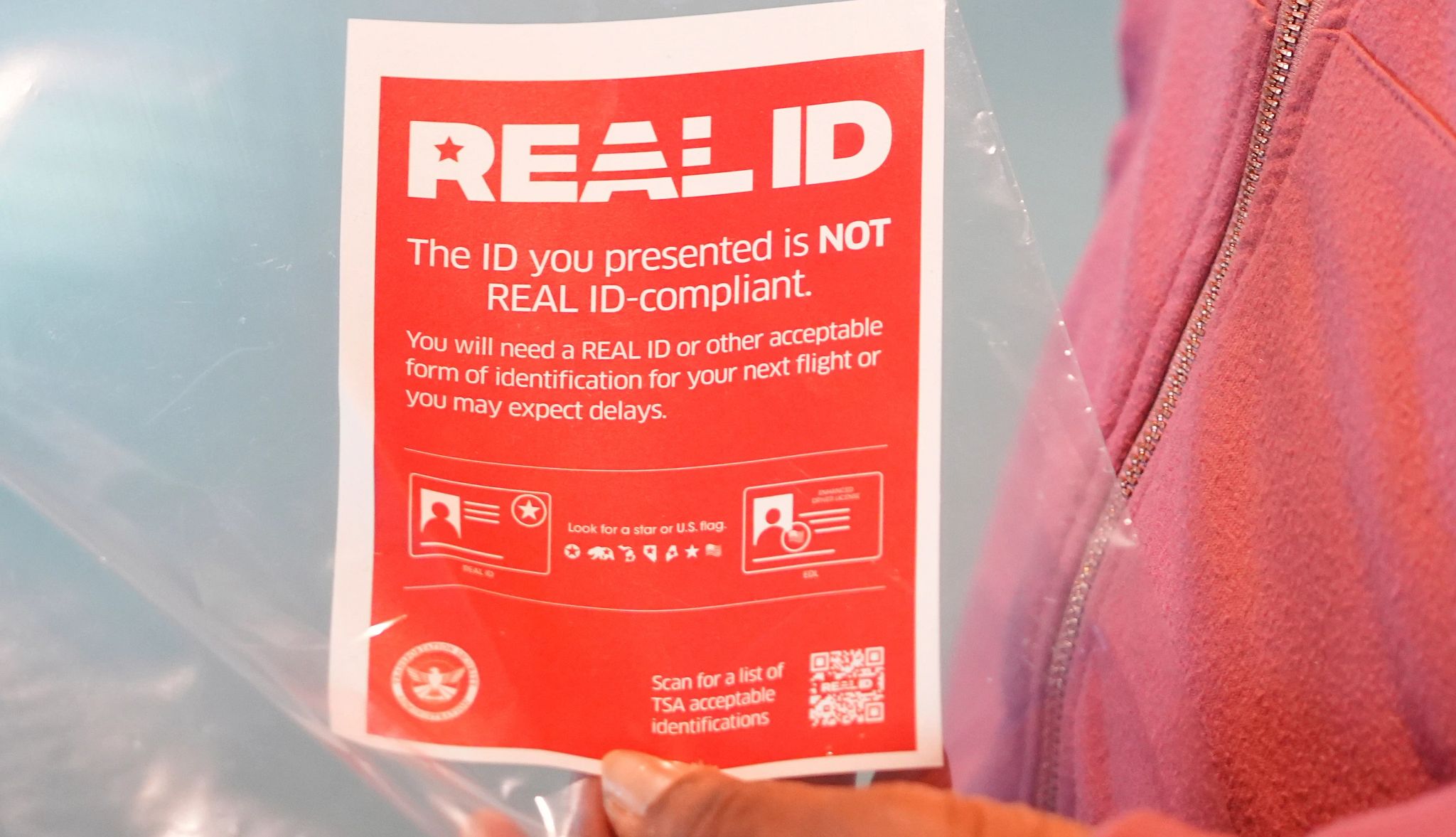

New Tech Tools Protect Consumers From Identity Fraud

Emerging fraud-fighting technologies make it easier to separate impostors from actual customers

Recommended for You