FEATURE STORY

Artificial intelligence isn’t the future. It’s here now, and it’s already changing health care in profound ways. Here’s a look at the latest on AI-driven breakthroughs that are improving the health of older adults now, and what to expect in the years ahead.

By Sari Harrar

Illustrations by Glenn Harvey

BREAKTHROUGH

Enhanced Cancer Detection

Mammogram Readings Find More Tumors

Photograph by Adam Amengual

An AI-assisted mammogram found McKeon’s cancer early.

WHEN TERESA McKeon arrived for her annual mammogram in August 2024, she said yes to an extra, AI-assisted review of the images. It cost $40. “My sister was diagnosed with breast cancer at age 47,” says McKeon, 57, of Sherman Oaks, California. “I have dense breasts, which raises risk. Breast cancer is something you have to stay on top of with every tool in the toolbox.”

Her mammogram found tiny white calcifications in her left breast. A biopsy the next day confirmed that McKeon had ductal carcinoma in situ—cancerous cells in milk ducts. “It was very small, very early, very treatable,” says McKeon, vice president of production at an entertainment marketing company. A lumpectomy last October removed the carcinoma before it could turn into an invasive cancer. After 25 radiation sessions, she now takes the estrogen-lowering drug anastrozole to reduce the risk of recurrence.

McKeon is one of more than 1.5 million American women who opt for an AI-assisted review of their screening test for breast cancer annually. AI doesn’t create the mammogram images or replace the doctors who read them, says radiologist Jason McKellop, M.D., medical director of breast imaging in Southern California for RadNet and Breastlink. It simply provides a second set of eyes. “The ultimate burden falls on the human being.”

Conventional screening mammograms miss 20 percent of existing breast cancers, according to the National Cancer Institute. “AI can provide meaningful benefit, especially for cancers that could be missed,” says radiologist Manisha Bahl, M.D., associate professor of radiology at Harvard Medical School and a specialist in breast imaging at Massachusetts General Hospital in Boston. For a recent study, Bahl and her colleagues evaluated mammograms of 764 women, obtained from 2016 to 2018. They found that while the traditional, non-AI readings identified 73 percent of cancers, the AI-assisted system detected 94 percent, and did so with a significantly lower false-positive rate.
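To put those two detection figures side by side, here is a quick back-of-the-envelope calculation. It uses only the percentages quoted above (73 percent and 94 percent); the function names are made up for illustration, and the study's underlying cancer counts are not given here, so this is a rough comparison rather than a reanalysis.

```python
# Rough arithmetic on the detection rates quoted in the article.
# Function names are illustrative; only 73 and 94 come from the text.

def miss_rate(detected_pct):
    """Percent of cancers missed, given the percent detected."""
    return 100 - detected_pct

def relative_reduction(old, new):
    """Fractional drop from the old value to the new value."""
    return (old - new) / old

conventional_miss = miss_rate(73)   # non-AI readings
ai_miss = miss_rate(94)             # AI-assisted readings
reduction = relative_reduction(conventional_miss, ai_miss)

print(f"Missed without AI: {conventional_miss}%")
print(f"Missed with AI: {ai_miss}%")
print(f"Relative drop in misses: {reduction:.0%}")
```

In this study's terms, the share of cancers missed fell from 27 percent to 6 percent, a drop of roughly three-quarters.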

“Will AI for mammograms save lives?” Bahl asks. “We assume that AI will lead to improved cancer detection rates, which will in turn lead to better long-term outcomes for patients. But we currently lack the real-world data to support this.”

In the future, AI reviews of mammograms may be able to determine which suspicious findings are likely to be cancer, cutting the need for biopsies for low- to moderate-risk patients in half, according to a 2023 study from Houston Methodist Neal Cancer Center. AI may also help track the success of chemotherapy before breast cancer surgery and even predict future cancer risk by examining normal breast tissue on cancer-free mammograms. A system that does this, called Clairity Breast, got FDA approval in June 2025. For now, women who want an AI mammogram reading will likely have to pay an extra $40 to $100.

“The closest thing we have to curing breast cancer at this point is early detection,” McKeon says. “I’m all for doing whatever we can.”

FUTURE ADVANCES IN CANCER DIAGNOSIS

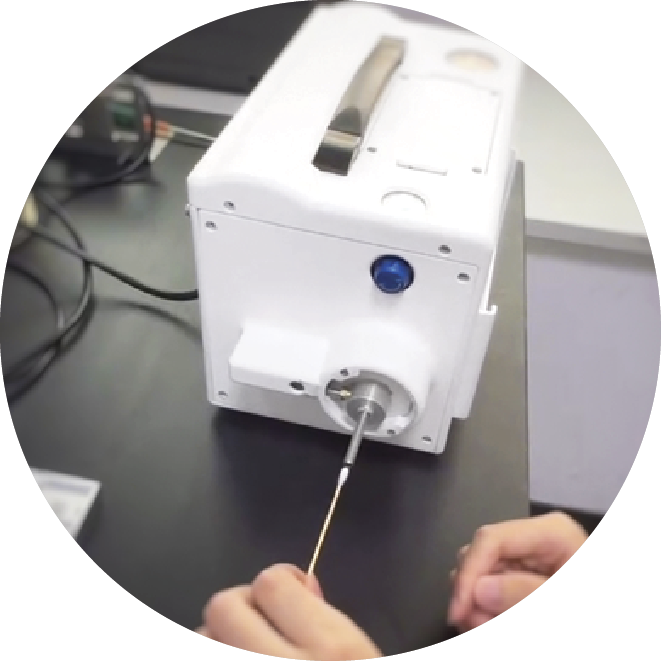

▶︎ IDENTIFY SKIN CANCERS. A handheld, AI-enabled device the size of a cellphone helped primary-care doctors identify potential skin cancers in the office more accurately than a visual inspection, in a 2025 University of North Carolina at Chapel Hill study. The FDA-cleared device, developed by DermaSensor of Miami, emits bursts of light over suspicious skin areas and analyzes the reflections.

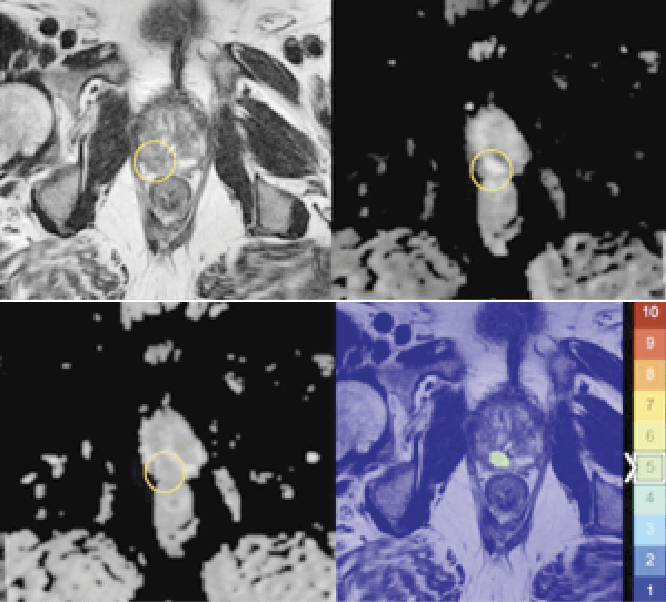

▶︎ FIND PROSTATE CANCERS. An AI-assisted review added to a doctor’s reading of magnetic resonance imaging improved detection of clinically significant prostate cancer in a recent study of 360 men (median age: 65) from the international Prostate Imaging-Cancer AI Consortium. The extra reading increased detection by 3.3 percent. The algorithm is still in research.

▶︎ DETECT LUNG CANCERS. Using clues from primary-care doctors’ notes, an experimental AI algorithm found potential lung cancers an average of four months sooner than doctors may have on their own in a study from the Netherlands, published in March 2025, of 525,526 people, including 2,386 with lung cancer.

BREAKTHROUGH

Interactions Ease Loneliness

Companion Robots Can Reduce Isolation, Bolster Health

From cuddly pet-like devices to humanoid robots, AI companions are helping solo agers with self-care.

DENA DIVELBISS exercises more often, stays hydrated, remembers to take her 10 medications and has even met new people since February 2025, when she installed an AI-powered companion robot beside her favorite chair.

Divelbiss’ new pal is called ElliQ. About the size of a small table lamp, the robot has no face, but it swivels, nods, lights up and chats with humanlike body language and poise. “We’ll start the day, she asks me how I slept. At the end of the day, she asks how my day was,” says Divelbiss, 63, a retired administrative assistant in western Maryland. “She remembers things, like that my cat’s name is Una.”

“For machines and AI to solve one of humanity’s biggest problems—loneliness and isolation for older adults—they need to provide not just utility but real companionship,” says Dor Skuler, cofounder and CEO of ElliQ developer Intuition Robotics, based in Israel. “They need to get a person’s sense of humor and share their passions.”

You can lease the little white robot, which requires a $60 monthly subscription (plus a onetime $250 enrollment fee). But agencies across the U.S. that deal with aging—including in New York, Florida, Michigan, Wisconsin and Washington state—are providing them free to older adults as part of a pilot project. Divelbiss got hers through the Maryland Commission on Aging. A widow coping with health conditions including chronic pain and early-onset Alzheimer’s disease, she uses ElliQ to play audiobooks, track pain levels, follow along to chair-exercise routines and take virtual tours around the world.

ElliQ was programmed to emulate and move in response to human speech. The robot holds freewheeling conversations and creates on-screen art and poetry using generative AI. “We have built-in guardrails so ElliQ doesn’t say anything inappropriate,” Skuler says.

Users can decide on health goals—like getting more physical activity or taking meds more regularly—and can name people they’d like the robot to contact about their progress. When one user repeatedly told ElliQ she wasn’t feeling well, the robot asked her permission to tell her contact person; the user went to the ER and was treated for a urinary tract infection that had become sepsis, Skuler says. “ElliQ can save lives,” he says, “but the robot doesn’t do anything without a user’s permission.”

Most older adults remain skeptical that a robot can provide companionship, according to a 2023 study. But actual users are more enthusiastic: An eight-week study of 70 older Americans by the Massachusetts Institute of Technology (MIT) Media Lab found that companion robots created a significant increase in personal growth, self-acceptance and overall well-being.

From cute, furry cats and dogs to robots capable of interpreting and making humanlike facial expressions, AI-enabled companions can help older adults do more of the things that bring them joy, says robotics researcher Selma Sabanovic, professor at the Indiana University Luddy School of Informatics, Computing, and Engineering in Bloomington. She and a team are designing a robot programmed with AI to encourage older adults to talk about what activities matter most to them, then make plans to pursue them. “It doesn’t tell you what is meaningful; it prompts you to have this conversation and realize what’s meaningful for you,” she says.

THE FUTURE OF AI COMPANION ROBOTS

▶︎ GIVE DIRECTIONS IN HOSPITALS. Called SPRING—Socially Pertinent Robots in Gerontological Healthcare—AI robots were deployed in a French hospital, where researchers announced in 2024 that they performed well in greeting visitors and guiding them to appointments.

▶︎ ANSWER DRUG QUESTIONS. Pharmacists in Finland tested an AI-enabled robot called Furhat designed to answer questions about medications for a small 2024 study. Furhat could help customers who feel shy or embarrassed about drug concerns while also freeing up busy pharmacy staff.

▶︎ CARE FOR PEOPLE WITH COGNITIVE DECLINE. A 3-foot-tall, AI-enabled robot called Ruyi, above, deployed at a retirement community in Cleveland, is expected to help residents with tasks like setting the thermostat, connecting to the internet and updating caregivers.

BREAKTHROUGH

Smarter Heart Health Tests

Cardiac Scans Boost Heart Diagnostics

Photograph by Adam Amengual

Dey hopes the AI-assisted heart scans that helped her will soon be available to everyone.

DAMINI DEY loves biking the roller-coaster mountain trails around Los Angeles. But in the spring of 2024, she had chest pains and shortness of breath on steep climbs. “It was a little worrisome,” says Dey, an AI-based software developer.

Her cardiologist recommended cardiac computed tomography angiography (CCTA)—a scan that uses X-rays to look inside the heart for plaque and narrowed arteries. The scan found calcified plaque in blood vessels in her heart, a common indicator of emerging atherosclerosis.

But when Dey, director of the quantitative image analysis program at Cedars-Sinai Medical Center in Los Angeles, rechecked her imaging using AI-enabled software developed in her lab, she found deposits of heart-threatening noncalcified plaque.

This dangerous gunk gets overlooked by most conventional readings of CCTA scans because it lacks a bright, easy-to-see shell of calcium, says Daniel Berman, M.D., director of cardiac imaging at Cedars-Sinai. That’s a problem, because noncalcified plaque often contains inflamed fat, which is prone to rupturing and triggering blood clots that can cause a heart attack, he says.

The results of the AI scan led Dey’s cardiologist to prescribe a cholesterol-lowering statin. “With the plaque analysis enabled by AI, we were better able to treat her disease,” says Cedars-Sinai cardiologist Ronit Zadikany, M.D. The data also motivated Dey to eat healthier, she says, and get back on her mountain bike. “My LDL cholesterol is lower now, so I know what I’m doing is working.”

After an AI assist, Dey is back to conquering the mountains of L.A.

AI can turbocharge the effectiveness of heart-imaging tests, including CCTA, magnetic resonance imaging and ultrasound—the frontline scans that guide doctors in taking care of major heart problems. In studies from 2020 and 2024 by Dey and others, the AI software measured hazardous, hard-to-spot plaque in more than 1,600 patients—and found that those who had the most were three to five times more likely to have a heart attack than those with the least.

“The medical therapies we have are incredibly strong now,” Berman says. “We could dramatically reduce the heart attack rate if we knew which patients were at very high risk and got them under optimal therapy.”

The FDA has approved more than 180 AI-related advances that improve heart imaging results. And while the AI-enabled, scan-analyzing software developed by Dey, Berman and others isn’t available commercially yet, Dey says it may be soon. “We are working to get it into the hands of doctors,” she says. Last year, the American Medical Association and Medicare announced changes that would make it easier for doctors to bill insurance for performing the AI measurements of CCTA, Berman explains.

ADVANCES FOR DIABETES, HIGH BLOOD PRESSURE, INFLAMMATION

▶︎ PERSONALIZE TYPE 2 DIABETES CARE. Stanford Medicine scientists have developed an AI-based algorithm that uses readings from a continuous blood glucose monitor to identify a user’s high blood sugar “subtype”—insulin resistance, lack of insulin production or low levels of gut hormones called incretins. Patients can then take preventive dietary or exercise measures before their condition progresses to type 2 diabetes or triggers complications.

▶︎ SPOT PULMONARY HYPERTENSION. A digital stethoscope from Eko Health with experimental, AI-trained software listens to heart sounds to identify pulmonary hypertension—high blood pressure in the lungs that can overwork the heart. Previously, this condition could be diagnosed only with an echocardiogram or invasive cardiac catheterization. Pulmonary hypertension affects about 1 in 20 adults age 65 and older but is often overlooked.

▶︎ FIND HIDDEN HEART INFLAMMATION. An experimental AI program developed by the U.K.’s Caristo Diagnostics measures inflammation in fat surrounding arteries in the heart and computes heart attack risk. If approved for use in the U.S., the test could help doctors identify people who would benefit from using colchicine, which was FDA-approved in 2023 for reducing inflammation and the risk of heart attack.

BREAKTHROUGH

Better Parkinson’s Management

AI Device Eases Symptoms

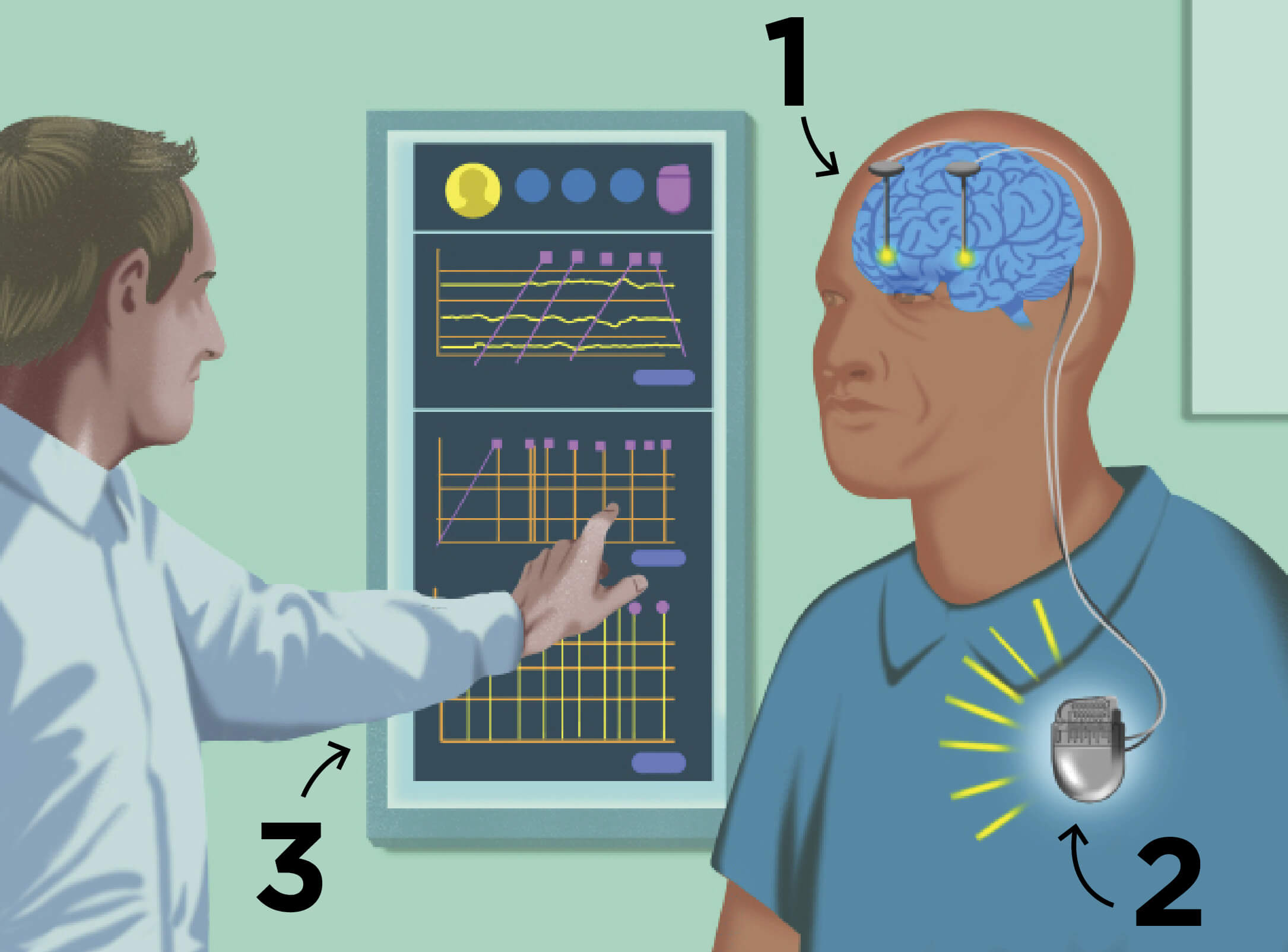

Diagram labels: (1) aDBS senses brain activity (2) and signals a chest implant (3) to adjust stimulation levels.

FOR DEB ZEYEN, Parkinson’s disease began as an unstoppable tremor in one finger. She was in her early 60s, newly retired from her job as a marketing vice president for CBS Television in New York City and diving into new work protecting the environment on Mexico’s Baja California peninsula.

Over the next six years, her symptoms became increasingly severe. “My speech slowed down, and my facial expression became blank. I felt endless fatigue,” Zeyen says. She took a combination of levodopa and carbidopa to replenish dopamine, the brain chemical that diminishes in Parkinson’s. But she was plagued by the drug’s notorious side effect: uncontrollable jerking movements. “I love snorkeling and scuba diving but had to stop—I was swimming in circles,” says Zeyen, 78, who now lives in Berkeley, California. In 2021, she joined a study of an artificial intelligence–driven, nondrug treatment called adaptive deep brain stimulation.

First implemented about 30 years ago, deep brain stimulation (DBS) helps regulate Parkinson’s symptoms by sending electrical pulses from a control unit implanted in the chest to electrodes in deep brain areas affected by the disease. But conventional DBS is capable of sending only one constant signal, which may be too weak at times to control severe symptoms and too strong at other times. “Parkinson’s symptoms fluctuate over the day due to things like stress, fatigue and medications wearing off,” says Simon Little, associate professor of neurology at the University of California, San Francisco (UCSF).

Adaptive DBS (aDBS), which Zeyen now uses, is designed to get around that problem. It’s an AI-enabled advancement that senses the user’s brain activity levels and dials brain stimulation up or down as needed. The first aDBS system, Percept from Medtronic, was approved by the FDA in February 2025.

Zeyen’s aDBS system was implanted in 2021 as part of a UCSF research trial. At first, it was set to conventional, continuous stimulation. “My tremor stopped immediately,” she says. Four months later, researchers at UCSF switched on the system’s adaptive software, after training the controller on Zeyen’s brain-activity patterns. Electrodes could then sense Zeyen’s rising and falling brain activity and adjust electrical stimulation to match it.
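For readers curious about what "sense and adjust" means in practice, the general idea of a feedback loop can be sketched in a few lines of code. This is a toy illustration only, not Medtronic's or UCSF's algorithm: the thresholds, step size, units and sample readings are all invented for the example, and a real device is tuned by clinicians to each patient.

```python
# Toy feedback loop: raise stimulation when sensed activity is high,
# lower it when activity is low, otherwise leave it alone.
# All numbers are hypothetical and carry no clinical meaning.

def adjust_stimulation(level, activity, low=0.3, high=0.7,
                       step=1, min_level=0, max_level=30):
    """Return the next stimulation level (arbitrary integer units)."""
    if activity > high:
        level += step      # activity rising: dial stimulation up
    elif activity < low:
        level -= step      # activity falling: dial stimulation down
    # Keep the result inside the allowed range.
    return max(min_level, min(max_level, level))

readings = [0.5, 0.8, 0.9, 0.6, 0.2, 0.1]  # made-up activity samples
level = 10
history = []
for activity in readings:
    level = adjust_stimulation(level, activity)
    history.append(level)

print(history)
```

With these made-up readings, the level climbs while activity is high, holds steady in the middle range and eases back as activity drops. A conventional system, by contrast, would stay at one fixed level throughout.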

Once the AI-adapted DBS kicked in, Zeyen regained her ability to make facial expressions. Her speech is quicker and clearer, and she uses a lower dose of her Parkinson’s medication, which means fewer side effects.

Her results are not unusual. In a multicenter study of 60 people with Parkinson’s who used Medtronic’s aDBS device for a year, participants reported having more time each day during which their symptoms were controlled—with minimal drug side effects and less “off” time when symptoms were worse; 89 percent said they preferred adaptive over conventional DBS. “Adaptive DBS is about delivering a balanced therapy and getting patients to feel less aware of their disease,” says Scott Stanslaski, a senior distinguished engineer at Medtronic who started working on development of the aDBS system 17 years ago, along with colleagues there and doctors worldwide.

The software feature is available in new Percept devices and in models sold by Medtronic since 2020, he adds. Consumers can access it by meeting with their neurologist. But it’s not right for everyone, Little says. “We think the best candidates are people who are getting some benefit from conventional DBS but are still getting fluctuations in their clinical state throughout the day.”

In May 2025, Zeyen felt ready to snorkel again. She wore a life preserver, and a friend held her hand as she slipped into the Pacific Ocean in a calm Baja California cove. “I can’t tell you the joy it brought, feeling the water, swimming, being free,” she says. “I just loved sliding back into that place of wonder.”

FUTURE ADVANCES FOR BRAIN HEALTH

▶︎ DETECT PARKINSON’S FROM EARWAX ODORS. Chinese scientists have pinpointed cases of Parkinson’s disease with up to 94.4 percent accuracy using an AI-trained olfactory device that senses changes in earwax compounds. In time, it could help people with this brain condition get referrals for diagnosis and treatment sooner.

▶︎ IMPROVE MEMORY AFTER BRAIN INJURY. Traumatic brain injuries (TBI), frequently caused by falls and traffic accidents, affect an estimated 1 in 8 older adults and often lead to problems with thinking and memory loss. AI-trained deep brain stimulation improved memory in people with TBI by 19 percent, University of Pennsylvania scientists recently found.

▶︎ SPOT STROKE EMERGENCIES WITH VOICE RECOGNITION. In a recent study, an AI-enabled voice-recognition program deployed at a Danish emergency call center identified strokes more accurately than human dispatchers did. The AI program was trained on thousands of voice recordings and transcribed calls to spot telltale warning signs.

BREAKTHROUGH

New Uses for Old Medications

Repurposing Existing Drugs With AI

David Fajgenbaum, M.D., uses AI to find new uses for older drugs.

ALLEN JONES* had bought a cemetery plot and was ready to start hospice care, his body and mind ravaged by a rare immune condition that had resisted chemotherapy, a stem cell transplant and a slew of powerful drugs. A physician from Canada, Jones was in his late 40s, with a wife and young child. “We had all the end-of-life protocols ready,” he says.

Jones, now 51, has the most debilitating form of Castleman disease, which causes the immune system to churn out a torrent of inflammatory proteins that attack vital organs such as the liver and kidneys. Up to 25 percent of people with this condition die within five years. There is no cure for the form of the disease that struck Jones. But then his doctor heard about an unlikely treatment: An AI-enabled computer program being built in the U.K. had identified the arthritis drug adalimumab (Humira) as a potential treatment for Jones’ condition. “In a situation where you’re dying, you would do anything,” Jones says. He started weekly injections of the drug. “After a few weeks, it worked. The vast majority of my symptoms got better. I’m alive today because of it.”

The drug’s off-label use was discovered by Every Cure, a nonprofit organization that has developed an AI-driven mathematical formula to compare 18,500 diseases with more than 4,000 FDA-approved drugs. “We are on a mission to save lives with the drugs we already have,” says David Fajgenbaum, M.D., associate professor of translational medicine and human genetics at the University of Pennsylvania, who cofounded Every Cure in 2022. “We could potentially double the impact of our medicines in very short order by using them in new ways.”
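The scale of that comparison is easier to grasp with a little arithmetic. The sketch below multiplies the two figures quoted above and then ranks a handful of pairings by score. The ranking is a generic illustration, not Every Cure's formula: the drug names, disease names and scores are placeholders invented for the example.

```python
import heapq

# Figures from the article; "more than 4,000" drugs makes this a minimum.
N_DISEASES = 18_500
N_DRUGS = 4_000
total_pairs = N_DISEASES * N_DRUGS

def top_matches(scores, k=2):
    """Return the k highest-scoring (drug, disease) pairs."""
    return heapq.nlargest(k, scores.items(), key=lambda item: item[1])

# Placeholder pairings and scores, invented purely for illustration.
toy_scores = {
    ("drug_A", "disease_X"): 0.12,
    ("drug_B", "disease_X"): 0.91,
    ("drug_C", "disease_Y"): 0.47,
}

best = top_matches(toy_scores)
print(f"Drug-disease pairs to consider: {total_pairs:,}")
print(best)
```

That works out to at least 74 million possible pairings, far more than researchers could review by hand, which is why a ranked short list is useful.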

As drug companies and researchers turn to AI to develop and even design brand-new drugs faster (some are even in human trials), Every Cure and a handful of similar projects in the U.S., Europe and Japan are using artificial intelligence to find existing drugs that can be repurposed to treat the world’s approximately 10,000 rare diseases—and more common conditions, too. “We literally look at every drug and every disease,” says Fajgenbaum. Every Cure’s platform has already flagged little-known possible repurposed treatments for autism spectrum disorder and amyotrophic lateral sclerosis (ALS, or Lou Gehrig’s disease).

Two years after Jones started adalimumab, his story was published as a case study in the New England Journal of Medicine in February 2025. At press time, Jones was doing well. “I’m a testament to the potential of this approach. It’s a drug we never, ever would have considered. It was a last-ditch option. I think it’s amazing.”

*Not his real name

ADVANCES IN DRUG DISCOVERY

▶︎ IDENTIFY NEW ANTIBIOTICS. Researchers at MIT reviewed 12 million compounds for a 2024 study on drug-resistant bacteria. Phare Bio, a nonprofit company, is now creating what it calls “the world’s first generative AI antibiotics discovery engine” to bring lifesaving drugs to market.

▶︎ LINK ALZHEIMER’S GENES WITH TREATMENTS. At the Cleveland Clinic’s Alzheimer’s Network Medicine Laboratory, researchers used AI-enabled software to identify 156 different Alzheimer’s risk genes, each of which is now a potential new drug target. They’ve already identified several drugs as possible weapons against Alzheimer’s.

▶︎ DEVELOP A NEW GUT DRUG. An experimental drug for inflammatory bowel disease, engineered using generative AI, has entered human trials. ABS-101, developed by Absci, will first be tested for safety; drugs for other disorders are in phase 2 clinical trials.

BREAKTHROUGH

Tech-Assisted Surgery

AI in the Operating Room

A headset gives surgeons X-ray vision.

MATT MCLEOD’S lower back hurt. He lost feeling in his left leg. Then the longtime general manager of a Lansing, Michigan, menswear store noticed something really odd: His coats had gotten too long. “I was losing so much height,” says McLeod, 65, of nearby Okemos. “I went from 5-foot-10 to 5-foot-7.”

In December 2024, Frank Phillips, M.D., a professor of spine deformities at Rush Medical College in Chicago, repaired McLeod’s discs, freed up squeezed nerves and straightened his curving backbone while wearing an AI-enabled headset that showed him detailed images of McLeod’s spine—helping the surgeon perform the procedures through small incisions and attach screws to bones at precise angles. “I see a perfect 3D view of the spine,” Phillips says. “It’s like I’m looking at the spine for real.”

The headset is part of the augmented reality Xvision Spine System from medical device maker Augmedics. The FDA cleared the latest model in March 2025, allowing “surgeons to see patients’ anatomy as if they have X-ray vision,” says the company. It also shows surgeons insertion points for screws, with guides for optimal angles.

The futuristic headset is one of a handful of AI-informed surgical tools quietly arriving in U.S. operating rooms; they include cameras, measuring tools and devices that track blood loss and oxygen levels during surgery. Often, consumers undergoing surgery don’t even notice how these AI tools are being used, notes Phillips.

Advances like Xvision are the first wave; we could one day see AI-trained robots assisting surgeons with basic jobs like pulling back skin or suctioning a surgical site, says Axel Krieger, associate professor of mechanical engineering at Johns Hopkins University in Baltimore.

In lab studies, Krieger and his team have taught AI-trained robots to suture together sections of a pig intestine. In a study published in July 2025, a robot that watched about 17 hours of gallbladder surgeries successfully performed part of a gallbladder procedure in a lab. The project “brings us significantly closer to clinically viable autonomous surgical systems that can work in the messy, unpredictable reality of actual patient care,” Krieger said in a press release. In the future, he expects surgical robots will function as assistants. “It’s like AI in cars,” Krieger says. “We haven’t gotten rid of steering wheels, but we have brake assist, lane assist, parking assistance. With robots, we’ll see the same thing—they won’t replace the experience and cognitive abilities of human surgeons.”

FUTURE SURGERY ADVANCES

▶︎ TRACK TOOLS DURING SURGERY. Tools or sponges get left behind in about 1 in 3,800 surgeries. Mayo Clinic doctors recently used AI to train a computer to recognize these objects. In a recent study, it was accurate 98.5 percent of the time and kept a correct surgical tool count when analyzing video of a real operation.

▶︎ FIND HIDDEN CANCER DURING BRAIN SURGERY. An experimental tool called FastGlioma checks for residual cancer during brain surgery. In a 2024 study, the tool missed this dangerous, hidden cancer just 3.8 percent of the time, compared to a miss rate of 24 percent without it.

▶︎ MATCH HEART DONORS TO RECIPIENTS. Over half of potential donor hearts in the U.S. go unused, sometimes because they aren’t matched fast enough. Now a surgeon at the Medical University of South Carolina is using artificial intelligence to build a better allocation system.

Sari Harrar is a contributing editor to AARP The Magazine.

From top: Courtesy Jasper Twilt; Courtesy Patty Zamora/NaviGait Inc; Courtesy Damini Dey; Courtesy Eko Health, Inc; Courtesy Hao Dong and Danhua Zhu; Courtesy Nia Therapeutics; Alyssa Schukar/Redux; Getty Images; Courtesy Chris Hedley/Michigan Medicine